Large-Eddy Simulation Code for City-Scale Environments

eCSE05-14

Key Personnel

PI/Co-I: Dr Zheng-Tong Xie - University of Southampton

Technical: Dr Vladimir Fuka - University of Southampton and Dr Arno Proeme - EPCC, University of Edinburgh

Relevant documents

eCSE Technical Report: Large-Eddy Simulation Code for City Scale Environments

Project summary

The project aimed to enhance the performance and capabilities of the ELMM large-eddy simulation software and the associated software library PoisFFT. The code is used to simulate wind flow in the atmospheric boundary layer and the transport and dispersion of pollutants in areas with complex geometry, mainly cities.

The main target of the project was to enable simulations of whole cities, i.e. areas with a horizontal dimension of 10 km or more, while maintaining high resolution in the areas of interest.

The strategy chosen for this task was first to optimize the existing code and its parallelization, and then to develop a new feature called grid nesting, or domain nesting. Grid nesting allows us to select a certain part of the city and solve the problem in that area on a grid with several times higher resolution, while using the flow from the whole-city domain as a boundary condition.
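One-way nesting needs the outer-domain flow on the boundary of the finer inner grid. A minimal sketch of that step, assuming simple linear interpolation along one boundary line (the interpolation actually used in ELMM is not described here), could look like this; `interpolate_boundary` is a hypothetical name.

```python
import bisect


def interpolate_boundary(coarse_x, coarse_u, fine_x):
    """Linearly interpolate coarse-grid values coarse_u, given at the sorted
    positions coarse_x (at least two points), onto the fine-grid boundary
    positions fine_x. Hypothetical helper for illustration only."""
    if len(coarse_x) < 2 or len(coarse_x) != len(coarse_u):
        raise ValueError("need at least two coarse points with matching values")
    result = []
    for x in fine_x:
        if not coarse_x[0] <= x <= coarse_x[-1]:
            raise ValueError(f"fine point {x} lies outside the coarse data")
        # Index j of the coarse interval [coarse_x[j], coarse_x[j + 1]] containing x.
        j = bisect.bisect_right(coarse_x, x) - 1
        j = min(j, len(coarse_x) - 2)  # x == coarse_x[-1] uses the last interval
        x0, x1 = coarse_x[j], coarse_x[j + 1]
        w = (x - x0) / (x1 - x0)  # 0 at x0, 1 at x1
        result.append((1.0 - w) * coarse_u[j] + w * coarse_u[j + 1])
    return result
```

For example, with coarse values every 4 m and a refinement ratio of 4, `interpolate_boundary([0.0, 4.0, 8.0, 12.0], [1.0, 2.0, 4.0, 3.0], [float(i) for i in range(13)])` fills the 1 m boundary points with values blended from the two surrounding coarse points. Interpolation alone cannot create the small eddies that the fine grid is able to resolve, which is why a turbulence generator is applied at the nesting interface.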

During the optimization, profiling was used to identify the parts of the code where most of the time was spent. Several loops were found in which an if condition evaluated in every iteration caused a slowdown, and these loops were rewritten using a different approach.
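The affected loops are not shown in this summary. As a hypothetical illustration, assume the condition selected special treatment for the first and last cell of a grid line. In compiled Fortran, a branch inside the innermost loop can hinder vectorization, and a common remedy is to peel the special cases out of the loop so that the interior loop body is branch-free. Both functions below (hypothetical names, not ELMM routines) return identical results:

```python
def smooth_with_branch(u):
    """Three-point smoothing; an `if` in every iteration picks boundary cells."""
    n = len(u)
    out = [0.0] * n
    for i in range(n):
        if i == 0 or i == n - 1:
            out[i] = u[i]  # boundary cell: keep the value
        else:
            out[i] = 0.25 * u[i - 1] + 0.5 * u[i] + 0.25 * u[i + 1]
    return out


def smooth_split(u):
    """Same result, but the boundary cells are handled before the loop,
    so the interior loop contains no branch."""
    n = len(u)
    out = list(u)  # boundary cells keep their values
    for i in range(1, n - 1):
        out[i] = 0.25 * u[i - 1] + 0.5 * u[i] + 0.25 * u[i + 1]
    return out
```

In Python the speed difference is negligible; the point is the loop structure, which in a compiled language lets the interior loop run without a per-iteration test.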

The MPI parallelization, which distributes the workload on large clusters and supercomputers, was optimized mainly by rewriting the exchange of boundary information between neighbouring processes. Using non-blocking MPI communication made it possible to avoid unnecessary synchronization and to let the MPI library decide in which order the transfers are performed.
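The boundary (halo) exchange can be pictured with a serial mock of a 1-D domain decomposition, where each subdomain carries one ghost cell on each side. In a real MPI code each message below would typically be an `MPI_Isend`/`MPI_Irecv` pair, with a single `MPI_Waitall` completing them all; that mapping is an assumption about how such exchanges are usually written, not a description of ELMM's source, and `halo_exchange` is a hypothetical name.

```python
def halo_exchange(subdomains):
    """Fill the ghost cells of 1-D subdomains laid out left to right.

    Each subdomain is a list [ghost_left, interior..., ghost_right] with at
    least one interior cell. The outermost ghost cells have no neighbour
    (like MPI_PROC_NULL) and are left untouched."""
    last = len(subdomains) - 1
    # Phase 1: "post" every send by snapshotting the edge values before any
    # ghost cell is overwritten, as all non-blocking sends are posted first.
    messages = []  # (destination rank, ghost index, value)
    for rank, sub in enumerate(subdomains):
        if rank > 0:
            messages.append((rank - 1, -1, sub[1]))  # to left neighbour's right ghost
        if rank < last:
            messages.append((rank + 1, 0, sub[-2]))  # to right neighbour's left ghost
    # Phase 2: "wait for all" and deliver. Each ghost cell is written exactly
    # once, so the delivery order does not affect the result.
    for dest, ghost, value in messages:
        subdomains[dest][ghost] = value
```

For three subdomains `[[None, 1, 2, None], [None, 3, 4, None], [None, 5, 6, None]]`, the middle one becomes `[2, 3, 4, 5]`. Because no transfer depends on another having finished, there is no need to order them pairwise (for example "all left sends, then all right sends"), which is the synchronization the non-blocking version avoids.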

The OpenMP and MPI parallelization approaches were combined in a hybrid scheme in which a smaller number of MPI processes communicate with each other, and each process uses several OpenMP threads.
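One reason the hybrid approach helps is that fewer MPI ranks mean fewer halo messages per exchange. The sketch below assumes a non-periodic 2-D process grid chosen as close to square as possible (similar in spirit to `MPI_Dims_create`); whether ELMM decomposes its domain exactly this way is an assumption, and the function names are hypothetical.

```python
import math


def process_grid(nranks):
    """Near-square 2-D factorisation px * py == nranks with px <= py."""
    if nranks < 1:
        raise ValueError("need at least one rank")
    px = math.isqrt(nranks)
    while nranks % px != 0:
        px -= 1
    return px, nranks // px


def halo_messages(px, py):
    """Messages per halo exchange on a non-periodic px x py grid: every face
    shared by two ranks carries one message in each direction."""
    return 2 * ((px - 1) * py + px * (py - 1))


def hybrid_layout(total_cores, threads_per_rank):
    """Ranks, process grid and halo message count for a given thread count."""
    if total_cores % threads_per_rank != 0:
        raise ValueError("threads_per_rank must divide total_cores")
    nranks = total_cores // threads_per_rank
    px, py = process_grid(nranks)
    return nranks, (px, py), halo_messages(px, py)
```

With 4096 cores, pure MPI (`hybrid_layout(4096, 1)`) gives a 64 × 64 process grid and 16128 messages per exchange, while 4 OpenMP threads per rank (`hybrid_layout(4096, 4)`) give 1024 ranks on a 32 × 32 grid and 3968 messages.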

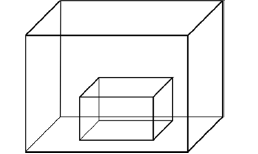

Nested domain inside an outer domain.

The grid nesting capability was implemented using independent groups of processes that form separate MPI communicators and behave like independent model runs. The domains are organized in a tree, with an outer domain at the top containing inner domains, which may themselves contain further inner domains. Each inner domain's grid may be finer than that of its outer domain. Optional two-way nesting allows the solution on the nested grid to be fed back to the outer grid.
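A minimal sketch of the two ideas in this paragraph, under stated assumptions: the domain tree is walked depth-first and each domain receives a contiguous block of global ranks, producing a per-rank "colour" of the kind one would pass to `MPI_Comm_split` to create one communicator per domain; and two-way feedback is modelled as averaging each block of fine cells onto the coarse cell covering it (1-D, uniform grids). `Domain`, `assign_colours` and `restrict` are hypothetical names, and ELMM's actual rank layout and feedback operator may differ.

```python
from dataclasses import dataclass, field


@dataclass
class Domain:
    name: str
    nranks: int  # MPI processes assigned to this domain
    children: list = field(default_factory=list)  # nested inner domains


def assign_colours(root):
    """Depth-first walk of the domain tree. Returns (colours, names), where
    colours[rank] is the index of the domain that global rank belongs to."""
    colours, names = [], []

    def visit(dom):
        colour = len(names)
        names.append(dom.name)
        colours.extend([colour] * dom.nranks)
        for child in dom.children:
            visit(child)

    visit(root)
    return colours, names


def restrict(fine, ratio):
    """Two-way feedback in 1-D: the coarse value is the mean of the `ratio`
    fine cells it covers (volume average on a uniform grid)."""
    if len(fine) % ratio != 0:
        raise ValueError("fine grid length must be a multiple of ratio")
    return [sum(fine[i:i + ratio]) / ratio for i in range(0, len(fine), ratio)]
```

For a city domain on 4 ranks containing a district on 2 ranks, which itself contains a street-scale domain on 2 ranks, `assign_colours` yields `[0, 0, 0, 0, 1, 1, 2, 2]`, so each group of processes can run its domain like a separate model while exchanging boundary data with its parent.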

The work done in this project enables simulations of much larger city-scale problems which are of great interest in areas such as urban air quality and urban climate, and security and safety in urban environments. It enables simulations of the interaction of the rural atmospheric boundary layer with the urban environment, allowing studies of the dispersion of dangerous pollutants in cities and the effects of the urban heat island.

Achievement of objectives

The objectives of the project were the following:

- to implement hybrid parallelisation functionality in the ELMM code to greatly improve parallel efficiency on ARCHER and similar supercomputers for modelling city-scale environments;

- to implement grid nesting functionality in the ELMM code to model very large spatial domains while maintaining high resolution in limited areas of great interest;

- to validate and optimise the synthetic turbulence generation functionality in the ELMM code, including improving the parallel efficiency of this subroutine, so that the code can be applied to any specific problem;

- to train other ARCHER users to run and test the ELMM code to expand the user community of the code.

Achievements:

- The weak scaling of the optimized code was tested up to 4096 CPU cores. The hybrid MPI/OpenMP version of ELMM itself, excluding the Poisson solver, had a parallel efficiency of 60 % and is expected to scale further. The Poisson solver scaled consistently with the n log(n) cost of the FFT, with the run time following t ~ log_100(100 N_CPU), and reached 55 % efficiency at 4096 CPU cores. The author of the PFFT library, which performs the transforms, demonstrated scaling to much higher CPU counts on a different supercomputer, even for pure MPI. We were not able to reproduce this in our tests, and we were not able to test larger runs on ARCHER.

- In the weak-scaling tests, the largest grid tested had 226 million cells. The test case was taken from the DIPLOS project, with a physical size of 96 × 48 × 12 building heights at high resolution. Given that the average building height in London is about 20 m, this corresponds to roughly 2 km × 1 km. With domain nesting, the outer domain can use a coarser resolution, making a domain of 10 km achievable.

- The correctness of the turbulence generator implementation was verified. The generator was then used to generate small scales at the nesting interface, and the correctness of this usage was verified as well.

- The grid nesting capability was presented by Z.-T. Xie at the UK Urban Working Group workshop (May 2016, Met Office, Exeter); by V. Fuka at the EGU 2016 conference (Vienna) and at the RMetS/NCAS workshop (July 2016, Manchester); and by Z.-T. Xie at the UK Wind Engineering Conference (September 2016, Nottingham). The planned DIPLOS project workshop has been postponed to March 2017; the grid nesting capability and the sharing of the code will be reported and discussed there.
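The size and cell-count figures in the list above can be cross-checked with a few lines of arithmetic. The 10 km × 10 km target area and the coarsening factor of 4 (applied in all three directions) for the outer domain are hypothetical choices for illustration, not values from the project.

```python
# Figures quoted in the text.
BUILDING_HEIGHT_M = 20.0      # average London building height
LARGEST_GRID_CELLS = 226e6    # largest grid in the weak-scaling tests
CORES = 4096                  # largest core count tested


def physical_size_m(extent_in_heights, height_m=BUILDING_HEIGHT_M):
    """Convert a domain size given in building heights to metres."""
    return tuple(n * height_m for n in extent_in_heights)


def cells_for_area(ref_cells, ref_area_m2, target_area_m2, coarsening=1):
    """Scale a cell count to a larger horizontal area at the same domain
    height, optionally coarsening the grid by `coarsening` in x, y and z."""
    return ref_cells * (target_area_m2 / ref_area_m2) / coarsening ** 3


lx, ly, lz = physical_size_m((96, 48, 12))
cells_per_core = LARGEST_GRID_CELLS / CORES
city_area_m2 = 10_000.0 * 10_000.0  # hypothetical 10 km x 10 km city
city_same_res = cells_for_area(LARGEST_GRID_CELLS, lx * ly, city_area_m2)
city_coarse = cells_for_area(LARGEST_GRID_CELLS, lx * ly, city_area_m2, coarsening=4)

print(f"DIPLOS domain: {lx:.0f} m x {ly:.0f} m x {lz:.0f} m")
print(f"cells per core at {CORES} cores: {cells_per_core:.0f}")
print(f"10 km x 10 km, same resolution: {city_same_res:.3g} cells")
print(f"10 km x 10 km, 4x coarser:      {city_coarse:.3g} cells")
```

Under these assumptions, covering 10 km × 10 km at the resolution of the DIPLOS test would need about 12 billion cells, whereas a 4× coarser outer domain needs about 190 million, comparable to the largest grid tested; the cells of the nested high-resolution domain come on top of that.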

Summary of the Software

The software ELMM (Extended Large-eddy Microscale Model, hosted at https://bitbucket.org/LadaF/elmm) solves turbulent flow in the atmosphere, optionally including the effects of temperature and moisture stratification. The filtered incompressible Navier-Stokes equations are discretized using the finite volume method, and the effects of subgrid turbulence can be modelled using one of several alternative subgrid models.

The pressure-velocity coupling is achieved using the projection method, in which a discrete Poisson equation is solved. The solution of this elliptic system typically requires a considerable part of the total CPU time. The Poisson equation is solved using the custom library PoisFFT (https://github.com/LadaF/PoisFFT), which uses the fast Fourier transform (FFT) from the FFTW3 library and its parallel wrapper PFFT (https://github.com/mpip/pfft).
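To illustrate why an FFT-based solver suits this problem: on a uniform periodic grid, each Fourier mode is an eigenvector of the discrete Laplacian, so solving the Poisson equation reduces to a forward transform, one division per mode and an inverse transform. The 1-D sketch below uses a naive O(n²) DFT in place of FFTW, covers only periodic boundaries, and uses hypothetical function names; it is not PoisFFT's API. In a projection method, the right-hand side f would be derived from the divergence of the provisional velocity field.

```python
import cmath
import math


def dft(x, sign):
    """Naive O(n^2) DFT: sign=-1 is the forward transform, sign=+1 the
    inverse transform without the 1/n normalisation."""
    n = len(x)
    return [sum(x[j] * cmath.exp(sign * 2j * math.pi * j * k / n) for j in range(n))
            for k in range(n)]


def solve_poisson_periodic(f, h):
    """Solve (p[i-1] - 2 p[i] + p[i+1]) / h**2 = f[i] on a periodic 1-D grid.

    Mode k has eigenvalue (2 cos(2 pi k / n) - 2) / h**2. The k = 0 mode has
    eigenvalue zero (p is only defined up to a constant), so it is set to
    zero, giving the zero-mean solution; this requires sum(f) == 0."""
    n = len(f)
    f_hat = dft(f, -1)
    p_hat = [0j] * n
    for k in range(1, n):
        eig = (2.0 * math.cos(2.0 * math.pi * k / n) - 2.0) / h ** 2
        p_hat[k] = f_hat[k] / eig
    return [(v / n).real for v in dft(p_hat, +1)]
```

Replacing the naive DFT by an FFT reduces the cost from O(n²) to O(n log n), which is the cost behaviour referred to in the scaling results above.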

As a result of this project, ELMM can now use OpenMP parallelization for shared-memory computers, MPI for distributed-memory computers, or a hybrid combination of the two.

The model typically needs to be recompiled according to the needs of the user and is not supplied as a pre-compiled ARCHER module. Once all prerequisites are installed, compiling ELMM is straightforward. With the exception of PFFT and PoisFFT, all prerequisites are already installed on ARCHER. However, the most recent FFTW module on ARCHER no longer includes the OpenMP versions of the library, which are required for the OpenMP and hybrid versions of ELMM. Therefore, an older version of the FFTW module must be selected on ARCHER.